Fraud Detection - Detecting fraudulent traffic on a video-sharing website

Context

Video-sharing websites such as YouTube or Dailymotion face a growing challenge: rising bot traffic, which jeopardizes the very business model of these platforms. These platforms display ads with each video viewed. Advertisers pay an amount per view, while video publishers are financially rewarded once they reach a high number of views. Advertisers do not want to pay for non-human views, whereas video publishers may try to maximize their revenue by generating fake traffic on the website. At our client, a top video-sharing platform, bots accounted for 15% of traffic. Many advertisers decided to stop investing in the platform because of the low return on investment it offered.

What did we do?

The video platform consulted MFG Labs to build an algorithm which would detect fraudulent traffic every day, and help them retain their ad revenues.

Solution

Understand bot traffic specificities

After retrieving ad-centric, video, account and IP data, the first step was to identify bot traffic within the datasets. An exploratory analysis of the KPI time series highlighted unusual behaviors. A selection of KPIs made it possible to manually find fraudulent videos, IPs and accounts. The first insight was that not all bots behave the same way, so the MFG Labs team built a set of bot profiles.

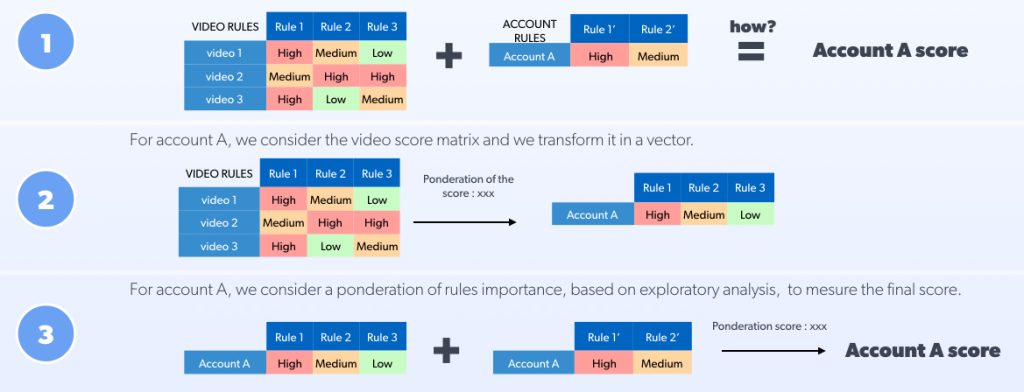
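To illustrate how unusual behavior can be surfaced in a KPI time series, here is a minimal sketch. It is not the method used in the project, which the text does not specify. It assumes a hypothetical daily view count per video and flags outlying days with a modified z-score based on the median; the function name, sample data and threshold are all illustrative.

```python
from statistics import median

def flag_unusual_days(daily_views, threshold=3.5):
    """Return the indices of days that deviate strongly from the median.

    Uses the modified z-score (median and median absolute deviation),
    which is less distorted by the spikes we are trying to find than
    a mean/standard-deviation score would be.
    """
    med = median(daily_views)
    mad = median(abs(v - med) for v in daily_views)
    if mad == 0:
        # At least half of the values equal the median: nothing to scale against.
        return []
    return [
        i for i, v in enumerate(daily_views)
        if 0.6745 * abs(v - med) / mad > threshold
    ]

# Hypothetical daily views for one video, with a suspicious spike on day 5.
views = [120, 135, 128, 140, 131, 5200, 126, 133]
print(flag_unusual_days(views))
```

Days flagged this way are only candidates for manual review; a viral video can produce the same spike as a bot.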

List a set of bot detection rules

The exploratory analysis provided a set of KPIs to watch and a list of bot profiles. By combining the two outputs, we could define bot detection rules. Each rule gave a fraud score, either at the video level or at the account level. We then computed a final fraud score for each account.
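The scoring scheme described above could be sketched as follows. The rules, KPI names, thresholds and the aggregation (taking the worst of the video-level and account-level scores) are all assumptions made for illustration; the actual rules and the way the final score was computed are not given here.

```python
# Hypothetical rules: each returns a score in [0, 1].
# KPI names and thresholds are invented for this example.

def rule_short_watch_time(video):
    # Many views but almost no time spent watching each one.
    return 1.0 if video["views"] > 1000 and video["avg_watch_seconds"] < 2 else 0.0

def rule_few_ips(video):
    # Views concentrated on very few IP addresses.
    ratio = video["distinct_ips"] / video["views"] if video["views"] else 1.0
    return 1.0 if ratio < 0.01 else 0.0

def rule_upload_burst(account):
    # Unusually high number of uploads in a single day.
    return 0.5 if account["uploads_last_24h"] > 200 else 0.0

VIDEO_RULES = [rule_short_watch_time, rule_few_ips]
ACCOUNT_RULES = [rule_upload_burst]

def account_fraud_score(account, videos):
    """Combine video-level and account-level rule scores into one score."""
    video_scores = [
        sum(rule(v) for rule in VIDEO_RULES) / len(VIDEO_RULES) for v in videos
    ]
    worst_video = max(video_scores, default=0.0)
    account_level = sum(rule(account) for rule in ACCOUNT_RULES) / len(ACCOUNT_RULES)
    # Assumption: an account is as suspicious as its most suspicious signal.
    return max(worst_video, account_level)

account = {"uploads_last_24h": 3}
videos = [
    {"views": 50000, "avg_watch_seconds": 1.2, "distinct_ips": 40},
    {"views": 300, "avg_watch_seconds": 95, "distinct_ips": 280},
]
print(account_fraud_score(account, videos))
```

Keeping each rule as a small function makes it easy to add a rule for a new bot profile without touching the aggregation.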

Detect remaining bots with an unsupervised algorithm

While the rules detected a large number of fraudulent accounts, they were not exhaustive: some bots fell through the cracks. MFG Labs therefore built a clustering algorithm based on video KPI time series. This method highlighted the remaining fraudulent accounts.

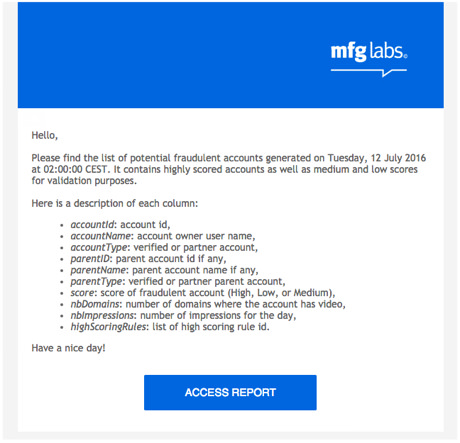
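The text does not say which clustering algorithm was used. As one possible illustration, the sketch below applies a small k-means to the shape of each account's view curve, on the assumption that organic traffic follows a daily rhythm while some bots produce a flat one. The data, the normalization and the choice of k-means are hypothetical.

```python
def normalize(series):
    """Scale a series so it sums to 1, keeping only its shape."""
    total = sum(series)
    return [v / total for v in series] if total else list(series)

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iterations=20):
    """Minimal k-means with deterministic farthest-point initialization."""
    centers = [points[0]]
    while len(centers) < k:
        # Pick the point farthest from all centers chosen so far.
        centers.append(max(points, key=lambda p: min(sq_dist(p, c) for c in centers)))
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assign each point to its nearest center.
        labels = [min(range(k), key=lambda j: sq_dist(p, centers[j])) for p in points]
        # Move each center to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                centers[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels

# Hypothetical views per time slot for five accounts.
series = [
    [10, 30, 60, 80, 50, 20],   # peaks in the middle of the day
    [12, 28, 55, 85, 45, 25],
    [8, 35, 65, 75, 55, 18],
    [40, 40, 40, 40, 40, 40],   # flat, machine-like traffic
    [41, 39, 40, 40, 41, 39],
]
labels = kmeans([normalize(s) for s in series], k=2)
print(labels)
```

A cluster is not fraudulent by itself; in practice the clusters would be compared with accounts already flagged by the rules to decide which group deserves review.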

Automation for a daily detection

Our prototype combined two distinct methods: detection rules and a clustering algorithm. Performance was measured directly by the platform: we extracted the list of accounts with a high fraud score, and the platform checked with its own method whether those accounts should be blacklisted. The prototype reached a high level of performance. We then automated the process to send a daily email listing the new fraudulent accounts. The publisher would then blacklist these accounts.
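The daily reporting step might look like the sketch below, which keeps only accounts that exceed a score threshold and have not been reported before, then builds the email with Python's standard `email` module. The threshold, addresses and account identifiers are placeholders, not details from the project.

```python
from email.message import EmailMessage

def build_daily_report(scores, already_reported, threshold=0.8,
                       sender="fraud-detection@example.com",
                       recipient="trust-and-safety@example.com"):
    """Return the newly flagged accounts and the email that lists them."""
    new_accounts = sorted(
        account for account, score in scores.items()
        if score >= threshold and account not in already_reported
    )
    msg = EmailMessage()
    msg["Subject"] = f"{len(new_accounts)} new suspected fraudulent accounts"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content("\n".join(new_accounts) or "No new accounts today.")
    return new_accounts, msg

# Hypothetical scores from the rules and clustering steps.
scores = {"acc_1": 0.95, "acc_2": 0.40, "acc_3": 0.88}
new_accounts, msg = build_daily_report(scores, already_reported={"acc_3"})
print(new_accounts)
print(msg["Subject"])
```

Sending the message would then be a matter of passing `msg` to `smtplib.SMTP(...).send_message(msg)` from a scheduled job, and adding `new_accounts` to the stored set of reported accounts.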

Results

In only a few weeks, our team was able to develop a set of algorithms and demonstrate a high-value use case:

- An algorithm running on fresh data detects a list of fraudulent accounts twice a day.

- Our client was able to sharply reduce bot traffic (divided by 5!), restore advertisers' confidence, and secure its ad revenues.